Simulating and Modeling to Understand Change - Class Notes

Table of Contents

- 1. Programming (Python)

- 2. Introduction

- 3. Random Numbers Generation

- 4. Testing Randomness

- 5. Discrete Random Variable Simulation

- 6. Continuous Random Variable Simulation

- 7. Choosing the right distribution

- 8. Monte Carlo Method

- 9. Monte Carlo Simulation

- 10. Discrete Events Simulation

- 11. Model Design

- 12. Regression Models

- 13. Classification Models

New search feature! Make use of the amazing fuzzy search algorithm. Just type in the search box and it will find the closest match in the page. Hit Enter to jump to the next match. Lmk if it doesn't work for you.

1. Programming (Python)

- Optional Arguments

This is a great way to make code more flexible. It is used in function definitions like so:

def my_function(a, b, c=1, d=2): print(a, b, c, d)- Global Variables

This is a variable that can be accessed from anywhere in the code. It is defined outside of any function. Here is an example:

x = 1 def my_function(): global x x = 2 my_function() print(x)

1.1. Lists

- Adding normal lists

If we add normal, lists we get a new list with the elements of both lists

a = [1, 2, 3] b = [4, 5, 6] c = a + b print(c)

- Numpy atomic vector

- This is a vector, that can only store one type of data.

- Dataframe Statistics

We can get statistics from a dataframe using the describe() function

import pandas as pd df = pd.DataFrame({'a': [1, 2, 3], 'b': [4, 5, 6]}) print(df.describe())

2. Introduction

2.1. Systems

A system is a group of objects that work together to achieve a goal. It consists of two environments: the inside and the outside. Each entity in the system has attributes or can do activities. A state can be defined for the system or components of the system. Examples of systems include computer systems, forests, restaurants, and banks. The key components of a system are the entities, attributes, activities, states, and events. Systems can be either discrete or continuous, such as the people in a bank or the water levels in a river.

2.2. Models

A model is an abstraction of a real-life situation, where the complexity of the model increases with the number of variables that are taken into account. The simplicity or realism of a model is determined by how closely it reflects the actual situation it is representing.

2.3. Simulation

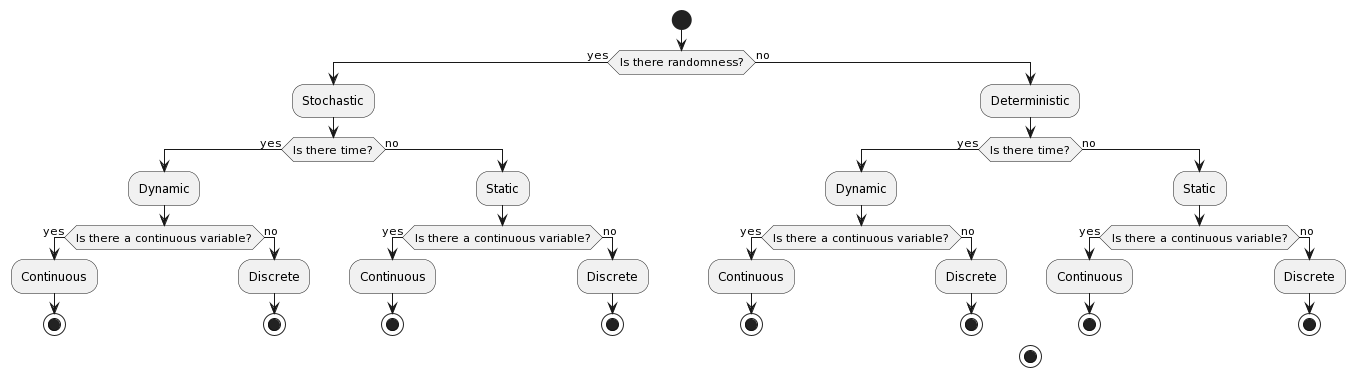

Simulation is a great tool to use when attempting to gain insights into the behavior of complex systems. Stochastic simulations rely on randomness to predict events, while deterministic simulations are based on predetermined inputs. For example, a restaurant/shop system can be simulated by making assumptions about the number of customers and employees in the system. Simulations are advantageous because they are cheaper, faster, replicable, safer, ethical, and legal when compared to real-life experiments. The decision between using a stochastic or deterministic simulation depends on the circumstances and the desired outputs.

- The point in which the state of a system changes is called an event.

2.3.1. Deterministic

In physics, determinism is the idea that all events are predetermined. There is no room for randomness or probability, as all changes are predetermined by the laws of nature. An example of this is an account gaining and losing followers, which is predetermined by the actions of the account holder. To simulate this process in Python, one could create a loop that tracks the number of followers gained and lost over time and stores it in a variable. This variable could then be used to print out the number of followers at any given time.

2.3.2. Stochastic

Stochastic simulation is a modelling technique which incorporates randomness, making use of random variables to generate a variety of possible outcomes. It is used for analysing complex systems in which the effects of randomness cannot be predicted deterministically, and thus provides a useful tool for predicting and understanding the behaviour of such systems.

2.3.3. Statics vs Dynamic

Simulations are typically classified as either static or dynamic. In a static simulation, there is no time variable; the system is unchanging and the same set of conditions is used throughout the simulation. In a dynamic simulation, time is a variable, meaning that the system is constantly changing and the conditions of the simulation can evolve over time.

2.3.4. Decision Tree

I hope this is right.

2.4. A Seed

A seed is any number that can be used to replicate semi-random experiments and simulations. It allows for the same experiment to be repeated in the same way, with the same conditions and results, by using the same seed each time. This makes it easy to compare results from different experiments and simulations, as the same starting point can be replicated.

3. Random Numbers Generation

- getting randomness is almost impossible

- People confuse randomness with strangeness

- Continuous distribution to discrete on range 0 to 1 - can be done by rounding

- Properties of pseudo-random numbers: uniform, independent, unpredictable

- Testing the randomness of a sequence of numbers:

- Look at the distribution of the numbers (visual) - should be uniform

- See if there is any pattern

- Algorithms:

- must be fast

- must be long

- should be repeatable with a seed

- Setting seed in python

random.seed(2023) - We can generate \(n\) random numbers with python by using

np.random.uniform(min,max,nax)

3.1. Linear Congruential Method

- We get a random like pattern.

- It is a linear transformation of a previous number

- Equation: \(x_{n} = (a x_{n-1} + c) \mod m\)

import numpy as np

def LCM(n, seed, a, c, m):

x = [seed]

for i in range(1,n+1):

x.append((a*x[i-1] + c) % m)

u = np.array(x)/m

return u

seq =LCM(n=8, seed=4, a=13, c=0, m=64)

print(seq)

- Those results are pretty bad

4. Testing Randomness

To check if we have an actually random generator, we need to test uniformity and independence.

4.1. Testing Uniformity

- We test using hypothesis testing

- Null hypothesis = sequence is uniform

- Alternative hypothesis = sequence is not uniform

- We use an alpha level of 0.05. If our \(p\) is less than 0.05 we reject the null hypothesis, otherwise we fail to reject the null hypothesis

- We want to fail to reject the null hypothesis to have uniformity.

- The test we use is Kolmogrov-Smirnov test

- We use the function

stats.kstestfrom thescipy.statslibrary

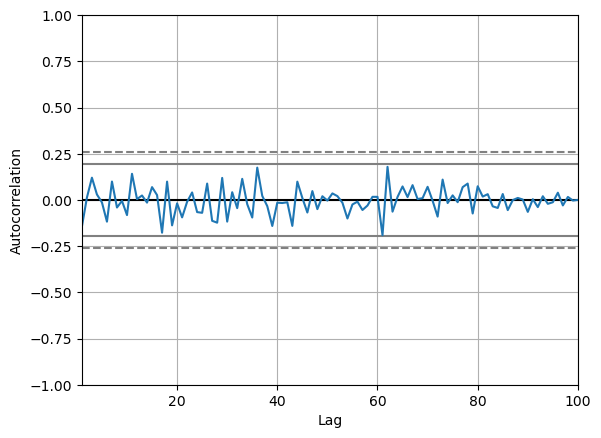

4.2. Testing Independence

- We again make use of hypothesis testing

- Null hypothesis = sequence is independent

- Alternative hypothesis = sequence is not independent

- To test for the dependence of each number, we use correlation

- The specific type of correlation we use is autocorrelation

- This means that we correlate the number and the sequence

- When we auto-correlate, we need to have a lag

- This is the number of steps we take ahead in the sequence

- We can use a pandas data frame:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

seq = np.random.uniform(0,1,100)

df = pd.DataFrame(seq, columns=['values'])

pd.plotting.autocorrelation_plot(df['values'])

plt.savefig("autocorrelation.png")

In the above plot:

- If the line is within the dashed lines, we fail to reject the null hypothesis

We need a more robust way of assessing if the sequence is independent:

acf, confint = statstools.acf(seq, alpha=0.05, nlags=10)

lbvalue, pvalue = statstools.q_stat(acf[1:], len(seq))

print("p-value: ", pvalue)

Now we can use the p-value to test for independence.

A key point here, is the difference between correlation and autocorrelation.

| Correlation | Autocorrelation |

|---|---|

| Correlates two variables | Correlates a variable with itself |

| No lag | Lag |

5. Discrete Random Variable Simulation

A random variable is a variable, with some potential outcomes, that is determined by their respective probabilities.

- Probability Mass Function

- This is a function that gives the probability of a discrete random variable taking on a specific value.

- Cumulative Distribution Function

- This is a function that gives the probability of a discrete random variable taking on a value less than or equal to a specific value.

5.1. Bernoulli Distribution

- This is a discrete random variable with two possible outcomes

- The probability of the first outcome is \(p\)

- The probability of the second outcome is \(1-p\)

- The general pmf is given by \(f(x) = p^x(1-p)^{1-x}\)

- Expected value and variance are given by \(E(X) = p\) and \(Var(X) = p(1-p)\)

- We can simulate this in python using

np.random.binomial(1,p,n)

5.2. Binomial Distribution

- Very similar to the Bernoulli distribution

- Key difference is that we have \(n\) trials

- The general pmf is given by \(f(x) = \binom{n}{x}p^x(1-p)^{n-x}\)

- Expected value and variance are given by \(E(X) = np\) and \(Var(X) = np(1-p)\)

- We can simulate this in python using

np.random.binomial(n,p,n_1)- This will give us \(n_1\) samples of \(n\) trials with probability \(p\)

5.3. Geometric Distribution

- This distributions gives us the probability of the first success in \(n\) trials

- The general pmf is given by \(f(X = x) = (1-p)^x p\)

- Expected value and variance are given by \(E(X) = \frac{1 - p}{p}\) and \(Var(X) = \frac{1-p}{p^2}\)

- We can simulate this in python using

np.random.geometric(p,n) - There is also the stats library which gives

stats.geom.pmf(x,p)andstats.geom.cdf(x,p)

5.4. Poisson Distribution

- This distribution gives us the probability of \(k\) events in a given time period

- The general pmf is given by \(f(x) = \frac{\lambda^x e^{-\lambda}}{x!}\)

- Turns into an exponential distribution when \(\lambda \rightarrow \infty\)

- \(\lambda\) is the mean number of events in the time period

- It can take negative values

- Values can be non-integer

- Expected value and variance are given by \(E(X) = \lambda\) and \(Var(X) = \lambda\)

- We can simulate this in python using

np.random.poisson(lam,n)- To compute the pdf we can use

stats.poisson.pmf(x,lam)

- To compute the pdf we can use

- Approximation

6. Continuous Random Variable Simulation

6.1. Cumulative Distribution Function

- This is a function that gives the probability of a continuous random variable taking on a value less than or equal to a specific value.

- The general cdf is given by \(F(x) = \int_{-\infty}^{x} f(x) dx\)

6.2. Uniform Distribution

- In this distribution, all values are equally likely

- The pdf is given by \(f(x) = \frac{1}{b-a}\)

- Expected value and variance are given by \(E(X) = \frac{a+b}{2}\) and \(Var(X) = \frac{(b-a)^2}{12}\)

- The cumulative distribution function is given by \(F(x) = \frac{x-a}{b-a}\)

- We can simulate this in python using

np.random.uniform(a,b,n)- We can get the pdf using

stats.uniform.pdf(x,a,b)

- We can get the pdf using

6.3. Exponential Distribution

- This distribution gives us the probability of the time between events in Poisson processes.

- It answers a question such as: "What is the probability that something will happen in the next n minutes?"

- The pdf is given by \(f(x) = \lambda e^{-\lambda x}\) where \(\lambda = \frac{1}{E(X)}\)

- Expected value and variance are given by \(E(X) = \frac{1}{\lambda}\) and \(Var(X) = \frac{1}{\lambda^2}\)

- The cumulative distribution function is given by \(F(x) = 1 - e^{-\lambda x}\)

- We can simulate this in python using

np.random.exponential(scale,n)- The scale is the inverse of the rate parameter \(\lambda\)

- We can get the pdf using

stats.expon.pdf(x,scale)or

6.4. Normal Distribution

- This distribution is the most common distribution

- The pdf is given by \(f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\) - This is horrible.

- Expected value and variance are given by \(E(X) = \mu\) and \(Var(X) = \sigma^2\)

- We can simulate this in python using

np.random.normal(mu,sigma,n)- We can get the pdf using

stats.norm.pdf(x,mu,sigma) - We can get the cdf using

stats.norm.cdf(x,mu,sigma)

- We can get the pdf using

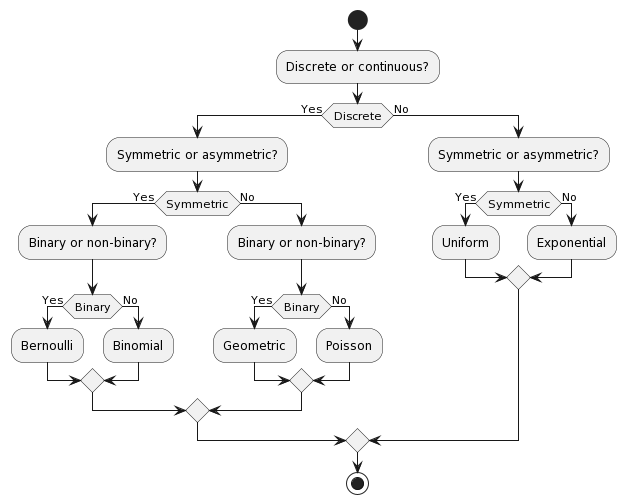

7. Choosing the right distribution

How do we know which distribution to use? We can use the following table to help us out.

| Distribution | Use Case |

|---|---|

| Bernoulli | Binary outcome |

| Binomial | Number of successes in \(n\) trials |

| Geometric | Number of trials until first success |

| Poisson | Number of events in a given time period |

| Uniform | All values are equally likely |

| Exponential | Time between events in Poisson processes |

| Normal | Most common distribution |

8. Monte Carlo Method

First, what is the Monte Carlo method? It is the aggregation of multiple simulations, to infer something. This should not be confused with the Monte Carlo simulation, which is a simulation of a random variable. Here is a table to help you remember the difference, it highlights the key differences between the two:

| Monte Carlo Method | Monte Carlo Simulation |

|---|---|

| A mathematical technique used for solving problems through repeated random sampling | A type of Monte Carlo method that involves generating random input values to simulate a system |

| Used to estimate the value of an unknown quantity based on a sample of random data | Used to simulate the behavior of a system under different scenarios |

| Can be used to solve problems in various fields like finance, engineering, and physics | Can be used to analyze the behavior of systems in various fields like finance, engineering, and physics |

| Can be used to generate random numbers, simulate random walks, and perform numerical integration | Can be used to answer the "what if" questions and incorporate a wider variety of scenarios than historical data |

| Samples are independent and identically distributed | Samples can be positively correlated and thereby increases the variance of your estimates |

| Can be used in combination with Markov chains | Can be used to estimate the probability of a particular outcome or the range of possible outcomes |

9. Monte Carlo Simulation

Now that we have a basic understanding of the Monte Carlo method, let's talk about the Monte Carlo simulation. This is a simulation of a random variable. We can use this to estimate the expected value of a random variable.

Characteristics:

- Randomness - has to have a large period between repeats (stochastic)

- Large sample

- Valid algorithm

- Accurately simulates

9.1. Process

- Define the domain of inputs (What kind of inputs are we going to use?)

- Generate the inputs from a distribution (How are we going to generate the inputs?)

- Run a simulation

- Replicate

- Aggregate

9.2. Using Python

We will often have to select some categorical value when it comes to MCS. In python, we can make use of numpy.random.choice() to do this. This function takes in a list of values and a probability distribution and returns a random value from the list. For example, if we wanted to simulate a coin flip, we could do the following:

import numpy as np print(np.random.choice(['heads', 'tails'], p=[0.5, 0.5]))

We do not have to give it the probability distribution, if we do not, it will assume that all values are equally likely. For example, if we wanted to simulate a die roll, we could do the following:

import numpy as np print(np.random.choice([1, 2, 3, 4, 5, 6]))

9.3. Inferential Statistics

- We use inferential statistics to make inferences about a population from a sample

- We simulate a sample, calculate the statistics and then use the statistics to make inferences about the population

10. Discrete Events Simulation

In this type of simulation, we model real-world systems as a sequence of discrete events. We can use this to model things like a manufacturing process, a supply chain, or a financial market. We can use this to answer questions like "What is the probability that a product will be delivered on time?" or "What is the probability that a customer will buy a product?".

We can also answer questions about how efficient a system is or howmany resources are needed to run a system. For example, we can answer questions like "How many employees are needed to run a manufacturing process?" or "How many machines are needed to run a manufacturing process?".

- Warmup Period

- This is a period of time where the simulation is preparing, data is being loaded.

10.1. Components

- Entities

- These are the objects that are being modeled. For example, in a manufacturing process, the entities could be products.

- Events

- These are the actions that are performed on the entities. For example, in a manufacturing process, the events could be the actions that are performed on the products.

- Resources

- These are the things that perform the events. For example, in a manufacturing process, the resources could be the machines that perform the actions on the products.

10.2. Types

- Activity Oriented

- We model the system as a series of activities

- Event Oriented

- We create various events

- Benefit: we can keep track of time

- Process Oriented

- Models entire life cycles

- Benefit: we can keep track of time and resources

10.3. simpy Library

We can create these simulations using simpy, a python library for discrete event simulation. We can install it using pip install simpy. We can then import it using import simpy.

- The type of simulation is process oriented

The structure of a simulation in simpy designed with functional programming is as follows:

- Define the environment

- Define the resources

- Define the processes

- Run the simulation

What is a process? A process is a function that defines the behavior of an entity in the simulation. For example, if we were simulating a manufacturing process, we could have a process that defines the behavior of a machine.

We make use of generators to simulate new entities entering the system. We can then use yield to wait for a certain amount of time or for a resource to become available. We can then use env.run() to run the simulation.

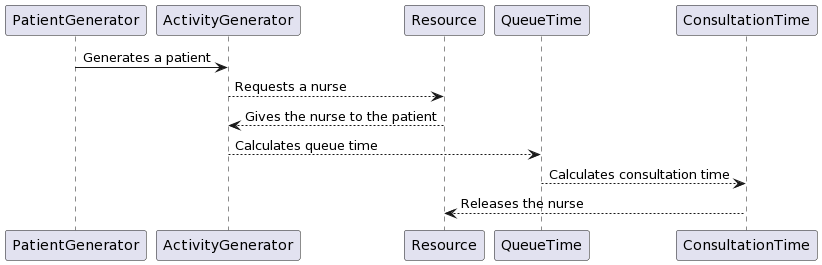

10.4. Designing Process

Let's take a look at a very simple example of a DES to learn how to use generators within discrete eventsimulations. The following graph describes a very simple experiment in which we simulate the queue ofpatients arriving at a weight loss clinic. We will have inter-arrival times of consultations, the entities aspatients, and the activity times will be represented by the consultation time of the patients with the nurse.

This is the process of how to design this simulation:

- create a patient generator that generates patients at a certain rate

- create an activity generator for each of the patients

- Request a resource (nurse)

- Create a queue time for the patient

- Create a consultation time for the patient

- Release the resource (nurse)

- Run the simulation

10.5. Example

Let's say we have a manufacturing process that has 3 machines. We want to know how many products we can make in a day. We can model this using simpy as follows:

import simpy

import numpy as np

env = simpy.Environment()

# Define the resources

machine = simpy.Resource(env, capacity=3)

# Define the processes

def manufacturing_process(env, machine):

# Wait for a machine to become available

with machine.request() as request:

# Wait for the machine to become available

yield request

# Wait for the manufacturing process to complete

yield env.timeout(np.random.uniform(0, 1))

# Run the simulation

env.process(manufacturing_process(env, machine))

env.run(until=1)

Midterm cutoff

11. Model Design

When we create a model, we have to consider three key things:

- The data

- When it comes to data, it is important to highlight how the data was collected. We also need to consider the quality of the data. We need to make sure that the data is accurate and that it is representative of the population.

- Response variable

- This is the variable that we are trying to predict. It is also known as the dependent variable.

- Explantory variables

- These are the variables that we use to predict the response variable. They are also known as the independent variables. Sometimes, there are explanatory variables that hurt the model, these are known as confounding variables.

11.1. Cross Validation

In order to have a good model, it is important not to mix the training and testing data. We can do this using cross validation. Unlike OpenAI…

This is done by splitting the data into 2 parts, the training data and the testing data. We then use the training data to build the model and the testing data to evaluate the model. We can then repeat this process multiple times to get a better idea of how the model performs.

Here are some common ratios for splitting the data:

| Training Data | Testing Data |

|---|---|

| 80% | 20% |

| 70% | 30% |

Why do we do this?

- Preventing overfitting

- If we use all the data to build the model, we will get a model that is very accurate on the training data. However, this model will not be able to generalize to new data. This is known as overfitting.

- Preventing underfitting

- If we use too little data to build the model, we will get a model that is not accurate on the training data. This is known as underfitting.

In python we can use the train_test_split function from the sklearn.model_selection library to split the data.

from sklearn.model_selection import train_test_split # Split the data into training and testing data X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

11.2. Cross Validation (LOOCV)

With this method, we go through the data one by one. We use all the data except for the current data point to build the model. We then use the current data point to evaluate the model. We then repeat this process for all the data points.

What this does is it assures a lower probability for bias and variance. In python, we can use the LeaveOneOut function from the sklearn.model_selection library to do this.

# Split the data into training and testing data

loo = LeaveOneOut()

for train_index, test_index in loo.split(X):

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

11.3. Cross Validation (K-Fold)

It is a bit similar to LOOVC but we split the data into k parts. We then use k-1 parts to build the model and the remaining part to evaluate the model. We then repeat this process for all the parts.

In python, we can use the KFold function from the sklearn.model_selection library to do this.

from sklearn.model_selection import KFold

# Split the data into training and testing data

kf = KFold(n_splits=5)

for train_index, test_index in kf.split(X):

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

12. Regression Models

We start of with SLR (Simple linear regression). There are 3 key steps in this process:

- Build the model

- Evaluate the model

- Use the model

We have two types of basic models, deterministic and probabilistic:

- Deterministic model

- Describes an exact relationship between the independent and dependent variables: \(y = \beta_0 + \beta_1 x\)

- Probabilistic model

- It builds ontop of the deterministic model by adding a random component to the model: \(y = \beta_0 + \beta_1 x + \epsilon\)

12.1. Probabilistic Model

The random component is called the error term, it adds an element of randomness to the model. For an ideal model, the error term should be normally distributed with a mean of 0.

We mix this with a bit of statistics, we have the population parameters \(\sigma^2_\epsilon\), \(\beta_0\), and \(\beta_1\). The best we can do is use estimators: \(\hat{\sigma}^2_\epsilon\), \(\hat{\beta}_0\), and \(\hat{\beta}_1\).

12.2. Least Squares

Say we collect some data from a sample. We now want to build a model that best fits the data. We can do this by minimizing the sum of the squared errors. This is called the least squares method.

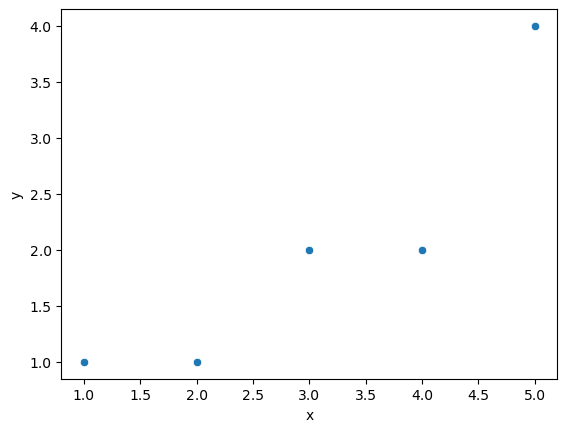

The first step in processing this data, is to create a scatter plot of the data. We can then draw a line of best fit through the data. To obtain the equation of that line, we can use the following formulas. We will be using a deterministic example.

The equation:

\[ \bar{y} = \hat{\beta}_0 + \hat{\beta}_1 \bar{x} \]

Sample data:

| x | y |

|---|---|

| 1 | 1 |

| 2 | 1 |

| 3 | 2 |

| 4 | 2 |

| 5 | 4 |

The formulas to compute \(\hat{\beta}_0\) and \(\hat{\beta}_1\) are as follows:

\[ \hat{\beta}_1 = \frac{SS_{xy}}{SS_{xx}} \]

\[ \hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x} \]

Where:

\[ SS_{xy} = \sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y}) \]

\[ SS_{xx} = \sum_{i=1}^n (x_i - \bar{x})^2 \]

SS means sum of squares. We can compute these values in python using the numpy library.

Lets apply this to our example:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Create the data

x = np.array([1, 2, 3, 4, 5])

y = np.array([1, 1, 2, 2, 4])

# Create the dataframe

df = pd.DataFrame({'x': x, 'y': y})

# Create the scatter plot

sns.scatterplot(x='x', y='y', data=df)

plt.savefig('scatter_plot.png')

print("scatter_plot.png")

Lets compute the sample mean of x and y:

x_bar = np.mean(x) y_bar = np.mean(y) print(x_bar, y_bar)

3.0 2.0

We now have everything to compute the coefficients \(\hat{\beta}_0\) and \(\hat{\beta}_1\):

beta_hat_1 = np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar)**2)

beta_hat_0 = y_bar - beta_hat_1 * x_bar

print(f"y = {beta_hat_0} + {beta_hat_1} * x")

y = -0.09999999999999964 + 0.7 * x

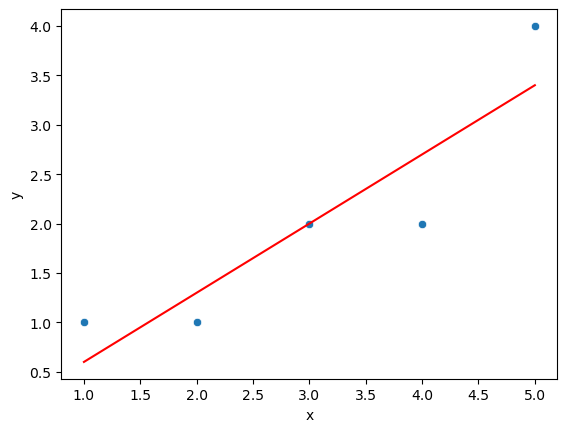

And we can plot this line of best fit:

sns.scatterplot(x='x', y='y', data=df)

plt.plot(x, beta_hat_0 + beta_hat_1 * x, color='red')

plt.savefig('scatter_plot_with_line.png')

print("scatter_plot_with_line.png")

With this, we have created a LSRL (Least Squares Regression Line). We can use this to make predictions. This kind of model, should primarily be used within the range of the data. If we want to make predictions outside of the range of the data, we should use a different model.

Confidence in the model can be measured using the coefficient of determination (\(R^2\)). This is a measure of how well the model fits the data. The closer the value is to 1, the better the model fits the data. The value is always between 0 and 1.

12.3. Random Error

Might have guessed already, the random error \(\epsilon\) is a distribution. Lets start with some assumptions:

- \(\epsilon\) is normally distributed \(N(\mu, \sigma^2)\)

- \(\epsilon\) is independent of \(x\)

What is left for us to figure out is the variance of epsilon.

- We know that it will be constant for all values of \(x\) (homoscedasticity) 1

We can use the following formula to compute the variance of \(\epsilon\):

\[ \sigma^2_\epsilon = \frac{1}{n-2} \sum_{i=1}^n (y_i - \hat{y}_i)^2 \]

If we return to our example, we can compute the variance of \(\epsilon\):

y_hat = beta_hat_0 + beta_hat_1 * x epsilon = y - y_hat epsilon_var = np.sum((y - y_hat)**2) / (len(x) - 2) print(epsilon_var)

0.36666666666666664

How do we interpret this result? We use the empirical rule, which tells us that 95% of the observed \(y\) values will be within 2 standard deviations of the LSRL.

With this information, we can build a simple confidence interval: \((\hat{y} - 2\sigma_\epsilon, \hat{y} + 2\sigma_\epsilon)\) which tells us that 95% of the observed \(y\) values will be within this interval.

Even better, we can calculate the mean error:

\[ me = \frac{s}{\bar{y}} * 100 \]

me = np.sqrt(epsilon_var) / y_bar * 100 print(me)

30.276503540974915

From this number, we can infer that ~30% of our estimates are off.

Summary of assumptions for the error:

| Assumption | Description |

|---|---|

| 1 | \(\epsilon\) is normally distributed \(N(0, \sigma^2)\) |

| 2 | \(\epsilon\) is independent of \(x\) |

| 3 | \(\epsilon\) is homoscedastic (constant variance) |

| 3 | The influence of some \(y\) on \(\epsilon\) does not influence any other value \(y_1\) |

12.4. Adequacy

- We use a t-test if the error is different from 0

12.5. Usefulness

- r2 is a measure of how well the model fits the data

12.6. AIC and BIC

12.7. Prediction

13. Classification Models

Footnotes:

This is a fancy way of saying that the variance is the same for all values of x